by Maria J C Machado.

Earlier this year, I did a lot of research on methods. I was tasked with preparing the “methods” class for a workshop on writing research papers in the life sciences. I summarize the bare bones of it here.

My advice to avoid desk rejection due to an unclear methods section is to:

- Organize the methods section using subheadings. This structure helps to break up an information-dense section and makes it easier to follow. You can use subheadings to group related procedures or to highlight key steps in your experimental design.

- As far as possible, describe the methods in the same order as the results. This logical progression makes it easier for readers to understand the sequence of events and how the experiment was conducted.

- Use parallel grammatical structures, which help to ensure that all necessary materials and methods are included and properly explained. Furthermore, including methods, materials, and data availability statements in papers and publications is highly recommended and may be required.

The main purpose of this class was to address the reproducibility crisis. A shockingly small proportion (approximately 11%) of the scientific findings in academic-led preclinical studies could be replicated in an industrial setting.

The main reasons for this were poor experimental design, incomplete or selective reporting, and suboptimal quality assurance. These problems were reported some time ago and continue to cause harm.

These findings are highly concerning because these studies inspire the industry to develop drugs, which are subsequently tested in humans via clinical trials. Recommendations within the publishing industry focus on transparency, as it creates trust and is the key to ensuring credibility.

However, what is reproducibility?

When new data are obtained in an attempt to reproduce a study, the term replicability is often used, and the new study is a replication or replicate of the original study. Repeatability is defined as the repetition of the experiment within the same study by the same researchers.

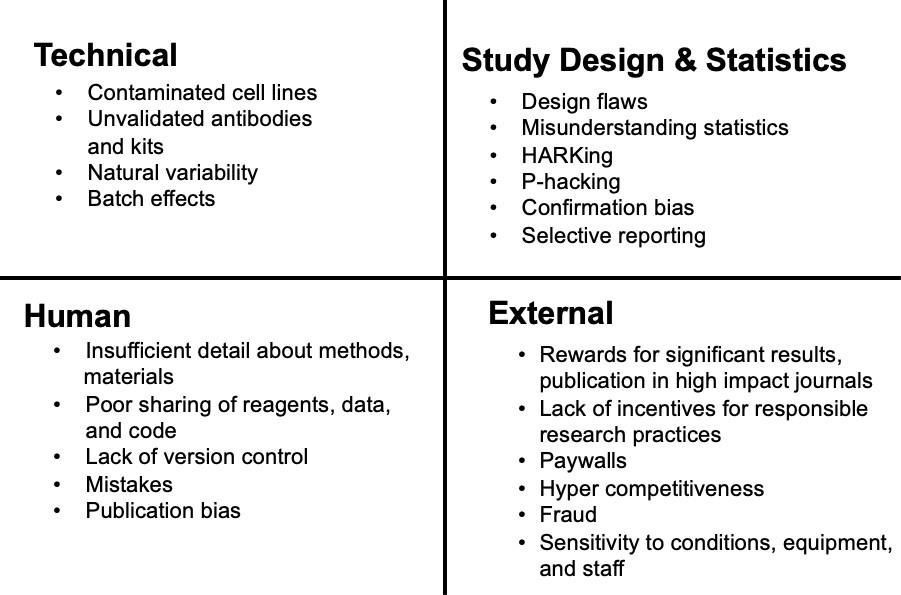

Reproducibility in the original sense is only acknowledged if a replication performed by an independent research team is successful. The confounding factors that may hinder the reproducibility of an experiment can be grouped into four categories:

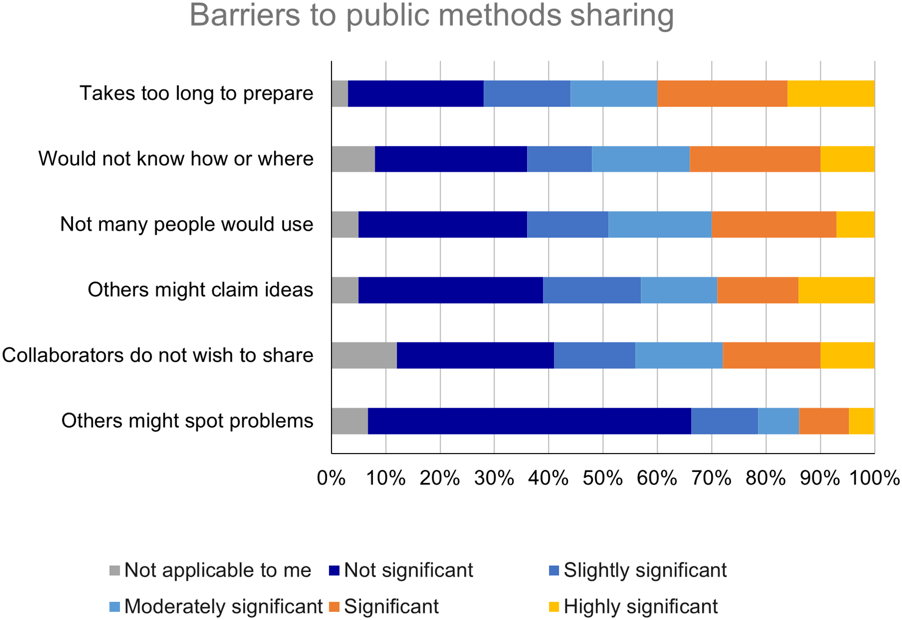

Detailed protocols need to be shared among research groups. Herein lies the first problem. According to a survey of researchers’ methods sharing practices and priorities, conducted between March and May of 2022, the most significant barriers to public sharing of methods were lack of time and lack of awareness about how or where to share (see figure below).

Additionally, barriers to public sharing of detailed method information were perceived to be of low to moderate significance. Therefore, the need to share knowledge about how to share protocols is glaring… R4E does just that. This community initiative shares resources ranging from general practices on reproducibility to data visualization tools and management strategies. We will discuss their importance during Love Data Week 2024.

Nevertheless, public sharing still revolves around (citing) publications. While peer-reviewed protocol journals (such as Nature Protocols, protocols.io, or Bio-protocol) offer wonderful publicly available resources, the methods section of a paper is not a protocol.

According to the PRO-MaP guidelines, effective reporting of the study design provides information on ethical approval, handling of outliers, and biological and technical replication. Samples should be unambiguously described, including a clear definition of the unit of study.
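As a rough illustration, the design elements listed above can be treated as a simple checklist to run against a draft before submission. The sketch below is only one possible way to do that: the item names, the `missing_design_items` helper, and the example draft are hypothetical and are not taken from the PRO-MaP guidelines themselves.

```python
# Hypothetical checklist: the item names are illustrative labels for the
# design elements mentioned in the text, not official PRO-MaP wording.
REQUIRED_DESIGN_ITEMS = (
    "ethical_approval",
    "outlier_handling",
    "biological_replication",
    "technical_replication",
    "unit_of_study",
)


def missing_design_items(reported):
    """Return the required items that are absent or left blank."""
    return [
        item
        for item in REQUIRED_DESIGN_ITEMS
        if not str(reported.get(item, "")).strip()
    ]


# Example draft, invented purely for illustration.
draft = {
    "ethical_approval": "Approved by the institutional ethics committee",
    "biological_replication": "6 mice per group",
    "technical_replication": "",
    "unit_of_study": "individual animal",
}

print(missing_design_items(draft))
```

Here the helper flags the outlier handling and technical replication entries, because one is absent and the other is blank. A check like this only confirms that something was written for each item; whether the description is clear enough is still a job for the authors and reviewers.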

Whether for “classical” publications or preprints, study design determines the choice of appropriate controls, the steps taken to minimize bias, the sample size, and the statistical analysis. It is essential to conduct and write up the study in a way that ensures all important information is recorded. Importantly, this approach enables the evaluation of the research by reviewers.

Steps to improve the methods section have been proposed by the participants of Love Methods Week. The main changes to current practices would facilitate scientific review and reduce the time wasted on unproductive tasks by reviewers. If you are a journal editor, please consider:

- removing word limits,

- clarifying expectations around textual novelty (aka, plagiarism),

- adopting guidelines for the use of shortcut citations,

- recommending the use of reporting guidelines for specific study designs.
