by Maria J C Machado.

I peer review a lot, mostly papers that follow an IMRaD format. By far, the section where I have the most comments and observations, the one that annoys me the most, is the Methods section. This is also the section of an academic paper that is most likely to draw rejections or negative comments from reviewers and editors.

We need to change this. According to the organizers of Love Methods Week:

- Methods are among the most valuable outputs that researchers create. In many fields, others may be more likely to reuse —and cite— your methods than your data.

- Reproducibility starts with methods. If others don’t know what you did, they can’t reproduce your research.

- We cannot reuse open or FAIR data responsibly if we do not know how they were generated. Methods need to be shared with the data to facilitate reuse.

The methods are also the section in which fraudulent papers produced by paper mills or completely generated by AI-based language models most easily slip past editorial checks. This is often where subject matter expertise is most needed, as individuals who have theoretical knowledge but have never performed the experiments in question (or experiments similar in nature) could easily miss tortured phrases.

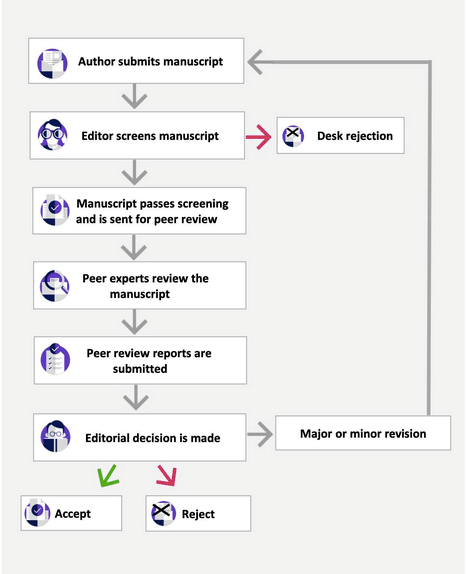

Thus, once again, an overview of the peer review process is presented below, including the checks that should be surpassed until a research paper is published:

Does this mean that different experts should review the methods and results sections?

Let us get back to this later in this post… I do believe that professors far removed from the bench should more often delegate the task of peer reviewing to their postdocs and PhD students (while supervising them). This type of collaboration should be rewarded, incentivized, and acknowledged in academic life and curricula. However, the journal editor should always be aware of this and approve it beforehand, to rule out potential conflicts of interest.

As I have already discussed, we are all biased. The most common types of implicit bias on the part of peer reviewers still relate to the country of origin of the author or of the corresponding author, their affiliation to specific institutions, their gender, and their identity, and this bias can be either positive or negative. Open peer review does not compromise the process, at least when referees are able to protect their anonymity.

Preprint servers have an enormous role to play in the evolution of peer review, and open or transparent peer review is advancing steadily, while decoupled or transferable peer review seems to have stalled. Is this because there is no incentive to review if no relationship is formed with editors? At least in 2013, ego did not seem to intrude on the peer review process. Is this still true today and in all fields?

The importance of the research question and the quality of the methodology are emphasized when peer review is done in two stages, in the format of registered reports.

The most important and differentiating characteristic of this process is that stage 1 takes place before the data are collected and the research is complete. At this stage, reviewers assess whether the authors have prespecified sufficient outcome-neutral tests (including positive controls and quality checks) to ensure that the results obtained are able to test the stated hypotheses. Stage 2 focuses mainly on whether the authors’ conclusions are justified given the data.

Registered reports are widely used in clinical research, where clinical trials need to be registered as part of the ethical approval process. Nevertheless, they are not always needed.

I often see papers at stage 2 and have comments that could have been addressed at stage 1, so I find that this staging saves time for both authors and reviewers. Although it requires a different editorial workflow, that workflow is likely to be useful.

How does Reviewer Credits help with this process?

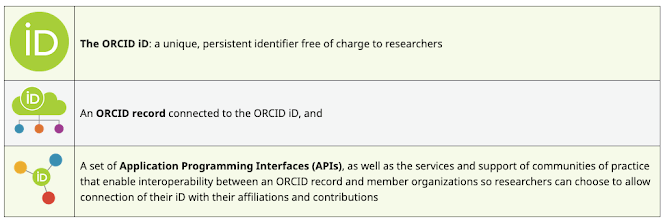

Reviewer Credits matches journal articles to peer reviewers using AI. So, if you register as a reviewer and share your expertise, perhaps by linking your ORCID record, then you’ll get matched to articles that fit your expertise.

Most peer review activities can be linked to a specific journal (not a specific paper) through an ORCID identifier. The advantage is that this identifier is transferable between affiliations and is linked to other services and records through its use in scholarly publications and editorial activities.

I could update my ORCID record to showcase my blog and link it to my personal email account, rather than an institutional one that I would eventually have lost access to in one of my many moves across institutions and countries.

No more worrying about spam emails from suspect publishers! You will get matched to articles that fit your expertise. Knowledge and experience with particular methods, such as statistical analyses, can be flagged on your profile and then shared with journals.

Reviewer matching also works well for journals, as extra peer reviewers can be sourced when needed to check methods and analyses, such as statistical approaches. Often, reviewers in the Reviewer Credits database are not linked to publishers directly, which enables us to provide independent training and support.

All of this allows a staged approach to peer review, which means that methods and analyses can be more effectively reviewed for publication.

International Love Data Week is coming up! Don’t forget! #LoveData24