Why a metric for Peer Reviewers? What is the Reviewer Contribution Index (RCI) for? How does RCI work?

Francisco Grimaldo is Associate Professor at the School of Engineering of the University of Valencia and co-author of the article “The F3-index. Valuing reviewers for scholarly journals”. He was the main speaker at the webinar launching the Reviewer Contribution Index (RCI), the novel metric based on the F3-index and developed by ReviewerCredits in collaboration with the University of Valencia. This is a summary of his talk, highlighting the main features and benefits of RCI.

Why a metric for Peer Reviewers. We all know that peer review activity deserves recognition and reward. But although there are several metrics that quantify the activity of scientists as authors, metrics that quantify the activity of peer reviewers are much less common. Some platforms do provide this recognition, but so far they have mainly done so by counting or aggregating the number of reviews completed.

F3-index: a metric for Peer Reviewer performance. In our opinion, there is room for improvement, because the paper we evaluate when we review a draft itself defines a context. We all know that reviewing one paper is not the same as reviewing another. We think this context matters when building such metrics, and that information about reviewer performance can be valuable to editors and journals, who can then look into the factors they are interested in. So, together with ReviewerCredits, we have developed a tool that can help everybody reward high-performing reviewers.

How F3-index works. What about the “context” I was speaking about? We know that some papers are more theoretical, some rely more on statistics, some focus on methodology, and so on. So we thought we needed this relative context to measure performance, and that is why we designed the index to work as I am about to explain. Take two reviewers assigned to the same manuscript. The benefit shared between them as a reward depends on how pertinent, informative and timely each review is. So we bring three factors, or dimensions, into play; again, we are not measuring the quality of a review but the reviewer's activity.
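The sharing rule just described can be written down compactly. The notation below is our own, not taken from the F3-index paper: s_ij^(m) is the share of the unit of benefit that reviewer i receives when paired with reviewer j on manuscript m, and S_ij totals those shares over the set M_ij of manuscripts the two have reviewed together. It is a sketch of the bookkeeping only; how each share is derived from the three factors is left open here.

```latex
s^{(m)}_{ij} + s^{(m)}_{ji} = 1, \qquad s^{(m)}_{ij} \ge 0,
\qquad
S_{ij} = \sum_{m \in M_{ij}} s^{(m)}_{ij}, \qquad S_{ij} = 0 \ \text{if}\ M_{ij} = \emptyset .
```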

From F3-index to Reviewer Contribution Index (RCI). In December 2018 we presented the F3-index in a paper co-written with Federico Bianchi and Flaminio Squazzoni (Federico Bianchi, Francisco Grimaldo, Flaminio Squazzoni, The F3-index. Valuing reviewers for scholarly journals, Journal of Informetrics, Volume 13, Issue 1, 2019, Pages 78-86, ISSN 1751-1577, https://doi.org/10.1016/j.joi.2018.11.007). ReviewerCredits has adopted the F3-index and named it Reviewer Contribution Index (RCI). The idea is to have a metric ranging from 0 to 100, recalculated once a week, that measures peer review activity. This activity is done mostly on a voluntary basis, which is why, again, we think it is very important to measure it and to properly recognize and reward it.
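The talk does not say how raw ratings are mapped onto the 0 to 100 range. A minimal sketch, assuming a simple rescaling relative to the top-rated reviewer (our assumption, not necessarily what ReviewerCredits does); the function name `to_rci_scale` and the sample ratings are hypothetical:

```python
def to_rci_scale(ratings: dict[str, float]) -> dict[str, float]:
    """Map non-negative raw ratings onto 0-100 relative to the top-rated reviewer.

    Hypothetical normalization: the best reviewer gets 100 and everyone else a
    proportional score. Assumes ratings are non-negative (as Perron-vector ratings are).
    """
    top = max(ratings.values(), default=0.0)
    if top <= 0:
        # Nothing positive to scale against: everyone gets 0.
        return {name: 0.0 for name in ratings}
    return {name: 100.0 * (r / top) for name, r in ratings.items()}


# Hypothetical raw ratings from one weekly run.
weekly_ratings = {"Plum": 0.42, "Green": 0.21, "Scarlet": 0.37}
print(to_rci_scale(weekly_ratings))
```

Rerunning the same function on each week's fresh ratings would reproduce the weekly recalculation. A min-max rescaling, which also pins the lowest reviewer at 0, would be an equally plausible choice.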

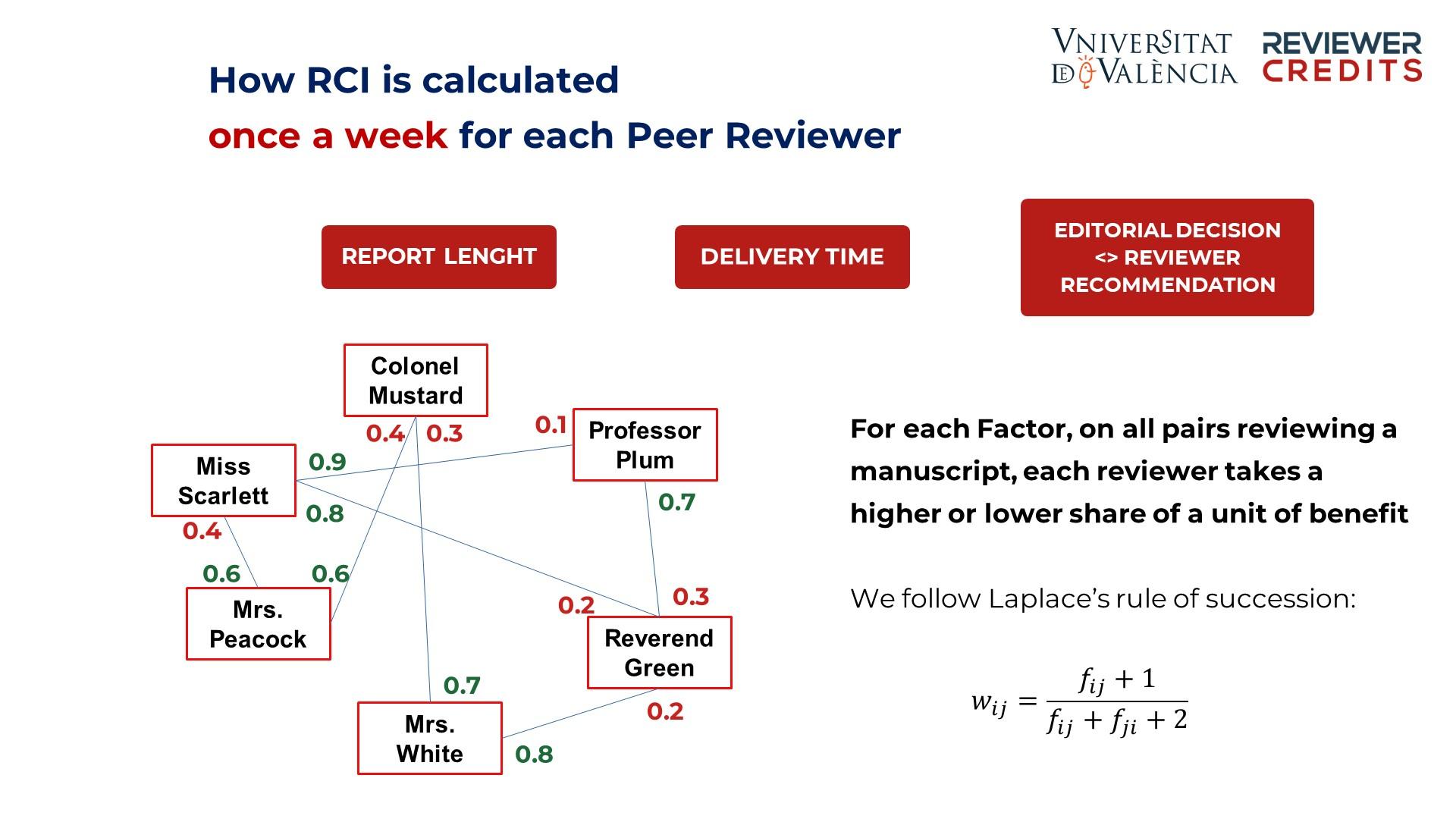

The 3 Factors for the F3-index calculation. To measure how informative a review is, we use the length of the report as a proxy. There has been a lot of previous work on this, and long reports are valuable because they tell authors not only whether the paper is good or bad but also how to improve it, which is the developmental value of peer review. The second factor is delivery time, that is, the time elapsed between the reviewer accepting the review and delivering the report. The third factor is the alignment of the reviewer's recommendation with the editor's decision. This does not mean that other factors could not be introduced or are irrelevant; that depends on the journal editor. We decided to start from three objective factors.
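To make the three factors concrete, here is a minimal sketch of how one unit of benefit could be split between two reviewers of the same manuscript. The weighting is our assumption, not the published F3-index formula: each factor controls one third of the unit, report length and delivery speed are split proportionally, and the alignment third goes to whichever reviewer's recommendation matches the editor's decision (evenly if both or neither match). The `Review` type, the `pair_shares` function and the sample numbers are hypothetical; the numbers were chosen to produce a 0.7/0.3 split.

```python
from dataclasses import dataclass


@dataclass
class Review:
    reviewer: str
    report_words: int        # proxy for how informative the report is
    days_to_deliver: float   # from accepting the review to delivering the report
    recommendation: str      # e.g. "accept", "minor", "major", "reject"


def _proportional(x: float, y: float) -> tuple[float, float]:
    """Split one unit in proportion to x and y, or evenly if both are zero."""
    total = x + y
    if total == 0:
        return 0.5, 0.5
    return x / total, y / total


def pair_shares(a: Review, b: Review, editor_decision: str) -> tuple[float, float]:
    """Split one unit of benefit between two reviewers of the same manuscript.

    Hypothetical weighting: each of the three factors controls one third of the unit.
    """
    # Informative: the longer report earns the larger part.
    inf_a, _ = _proportional(a.report_words, b.report_words)
    # Timely: the faster reviewer earns more, so weight each by the other's delay.
    tim_a, _ = _proportional(b.days_to_deliver, a.days_to_deliver)
    # Pertinent: credit goes to recommendations that match the editor's decision.
    per_a, _ = _proportional(float(a.recommendation == editor_decision),
                             float(b.recommendation == editor_decision))
    share_a = (inf_a + tim_a + per_a) / 3
    return share_a, 1.0 - share_a


plum = Review("Plum", report_words=900, days_to_deliver=14, recommendation="major")
green = Review("Green", report_words=600, days_to_deliver=14, recommendation="reject")
print(pair_shares(plum, green, editor_decision="major"))  # roughly (0.7, 0.3)
```

Because the two shares always sum to one, every co-review contributes the same total benefit, regardless of how long or fast the reviews were.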

How the F3-index is calculated once a week for each Peer Reviewer. Imagine the characters represented in the image below, and pair them up every time two of them review the same manuscript. For instance, if we consider Professor Plum and Reverend Green, and take 1 as a unit of benefit, Professor Plum receives 0.7 and Reverend Green 0.3. Let me highlight two characteristics of this sample network. First, see how Miss Scarlet, Reverend Green and Professor Plum are connected: they are linked because they are reviewing the same paper, so, again, the network defines a context. Second, Mrs. Peacock is not directly linked to Reverend Green. This is important because the method does not need every reviewer to be directly connected to every other one: an implicit link through other reviewers can connect all of us.
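The network idea can be sketched in a few lines: accumulate each reviewer's benefit against every co-reviewer, then check whether two reviewers are linked through a chain of co-reviews. This is an illustration under our own assumptions; apart from the 0.7 split for Plum and Green, the records are invented, and `build_benefit_matrix` and `connected` are hypothetical names.

```python
from collections import defaultdict, deque

# Each record is (reviewer_i, reviewer_j, share_i) for one manuscript both reviewed;
# reviewer_j receives 1 - share_i. Apart from Plum/Green, the shares are invented.
pair_records = [
    ("Plum", "Green", 0.7),       # Plum, Green and Scarlet reviewed one manuscript together
    ("Scarlet", "Green", 0.6),
    ("Scarlet", "Plum", 0.4),
    ("Peacock", "Scarlet", 0.5),  # Peacock co-reviews with Scarlet, never with Green
]


def build_benefit_matrix(records):
    """Accumulate S[i][j]: total benefit reviewer i earned when paired with reviewer j."""
    S = defaultdict(lambda: defaultdict(float))
    for i, j, share_i in records:
        S[i][j] += share_i
        S[j][i] += 1.0 - share_i
    return S


def connected(S, start, goal):
    """Breadth-first search: is there a chain of co-reviews linking start to goal?"""
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for neighbour in S.get(node, {}):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return False


S = build_benefit_matrix(pair_records)
print("Peacock linked directly to Green:", "Green" in S["Peacock"])  # False
print("Peacock reachable from Green:", connected(S, "Green", "Peacock"))  # True
```

Peacock and Green never share a manuscript, yet Scarlet connects them, which is the kind of implicit link that lets all reviewers be rated on one scale.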

Interesting properties of RCI. It is robust. There is low correlation between the index and raw statistics such as the number of reviews done. It neither penalizes newcomers nor favors hyper-productive reviewers. It is resistant to noise and to any single factor driving the index, so it is stable.

RCI: a transparent box. It is transparent in the sense that its criteria are clear and straightforward. We have tested it with the Journal of Artificial Societies and Social Simulation, applying it to about 1,000 reviewers. It relies on a well-tested rating method, the Keener method. And it is the result of a cross-sectoral and cross-disciplinary collaboration within the PEERE COST Action: New Frontiers of Peer Review and the project PREWAIT – Advanced Information Tools about peer review of scientific manuscripts (RTI2018-095820-B-I00 MCIU/AEI/FEDER, UE).
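The Keener method rates competitors by the Perron eigenvector of a non-negative matrix of pairwise outcomes. Below is a minimal sketch of the textbook version, not the F3-index implementation: it assumes S[i][j] holds the total benefit reviewer i earned against reviewer j, builds a_ij = h((S_ij + 1) / (S_ij + S_ji + 2)) with Keener's skewing function h for pairs that co-reviewed (and 0 otherwise, our choice), and finds the eigenvector by power iteration. The function names and the sample totals are hypothetical. A unique positive rating vector requires the co-review network to be connected.

```python
import math


def skew(x: float) -> float:
    """Keener's skewing function h(x) = 1/2 + 1/2 * sgn(x - 1/2) * sqrt(|2x - 1|)."""
    return 0.5 + 0.5 * math.copysign(math.sqrt(abs(2 * x - 1)), x - 0.5)


def keener_ratings(S, iterations=1000, tol=1e-12):
    """Rate reviewers by the Perron vector of Keener's matrix built from pairwise totals S."""
    names = sorted(set(S) | {j for row in S.values() for j in row})
    n = len(names)
    if n == 0:
        return {}
    # a_ij = h((S_ij + 1) / (S_ij + S_ji + 2)) for pairs who co-reviewed; 0 otherwise.
    A = [[0.0] * n for _ in range(n)]
    for a, i in enumerate(names):
        for b, j in enumerate(names):
            s_ij = S.get(i, {}).get(j, 0.0)
            s_ji = S.get(j, {}).get(i, 0.0)
            if a != b and s_ij + s_ji > 0:
                A[a][b] = skew((s_ij + 1) / (s_ij + s_ji + 2))
    # Power iteration on A + I: it has the same eigenvectors as A and is primitive
    # when the co-review network is connected, so the iteration converges.
    r = [1.0 / n] * n
    for _ in range(iterations):
        nxt = [r[a] + sum(A[a][b] * r[b] for b in range(n)) for a in range(n)]
        total = sum(nxt)
        nxt = [v / total for v in nxt]
        converged = max(abs(u - v) for u, v in zip(nxt, r)) < tol
        r = nxt
        if converged:
            break
    return dict(zip(names, r))


# Invented pairwise totals for the four reviewers in the example network.
S = {
    "Plum": {"Green": 0.7, "Scarlet": 0.6},
    "Green": {"Plum": 0.3, "Scarlet": 0.4},
    "Scarlet": {"Plum": 0.4, "Green": 0.6, "Peacock": 0.5},
    "Peacock": {"Scarlet": 0.5},
}
for name, rating in sorted(keener_ratings(S).items(), key=lambda kv: -kv[1]):
    print(f"{name:8s} {rating:.3f}")
```

Adding the identity before iterating is a standard trick: it leaves the eigenvectors unchanged but avoids the oscillation plain power iteration can show on some network shapes.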

Francisco Grimaldo is Vice Dean and Associate Professor at the School of Engineering of the University of Valencia and a Research Fellow at the Italian National Research Council. His research interests are agent-based modelling and simulation, machine learning, and data analysis and visualization.

He is Director of the Capgemini-UV Chair in innovation in software development, organizer of DataBeers VLC, and a member of the Catalan Association for Artificial Intelligence and of the Association for the study of Socio Technical Complex Systems. francisco.grimaldo[at]uv.es